October 4th, 2018 | by Krzysztof Krzemień

.NET Developer Days 2018: Artificial Intelligence and Transformations

The .NET Developer Days 2018 took place on September 18th-19th at EXPO XXI in Warsaw. 40 sessions and 900 attendees later, we know that our trip to the capital of Poland was not only necessary but also productive. This is how you make a conference!

.NET Developer Days 2018 Summary

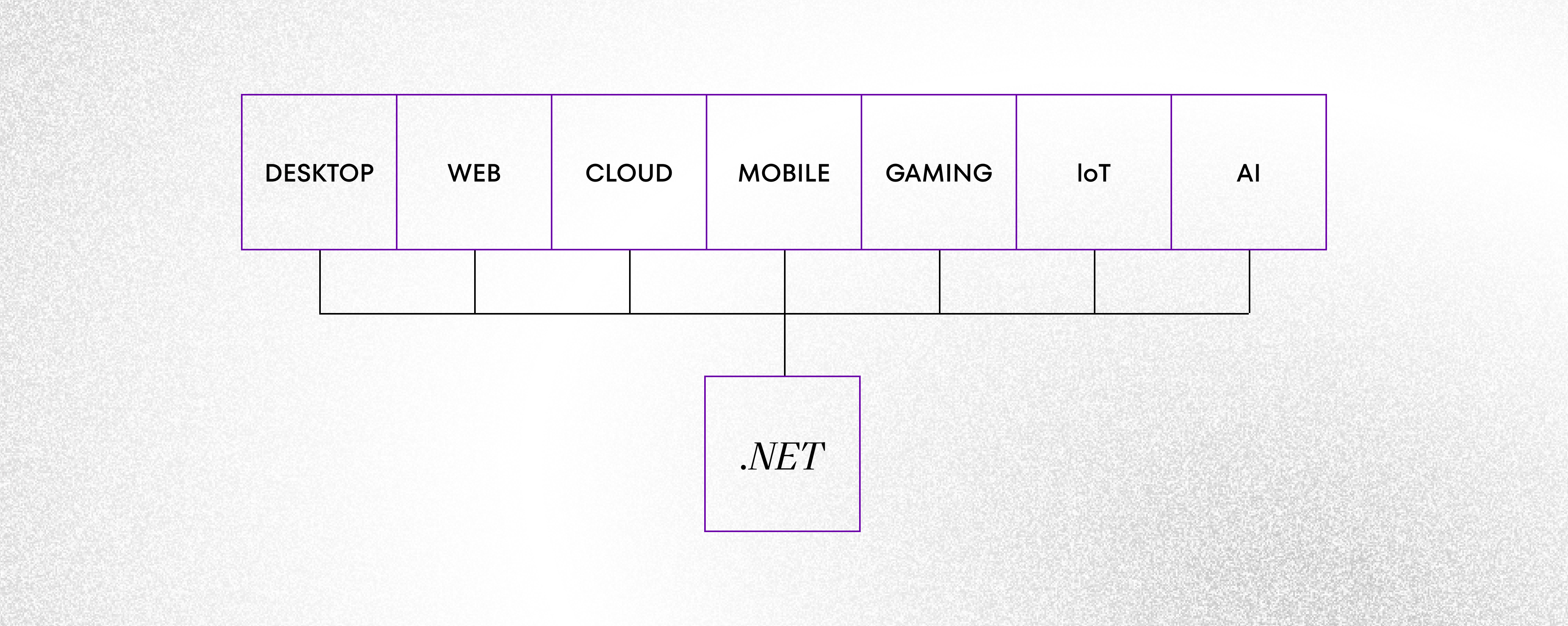

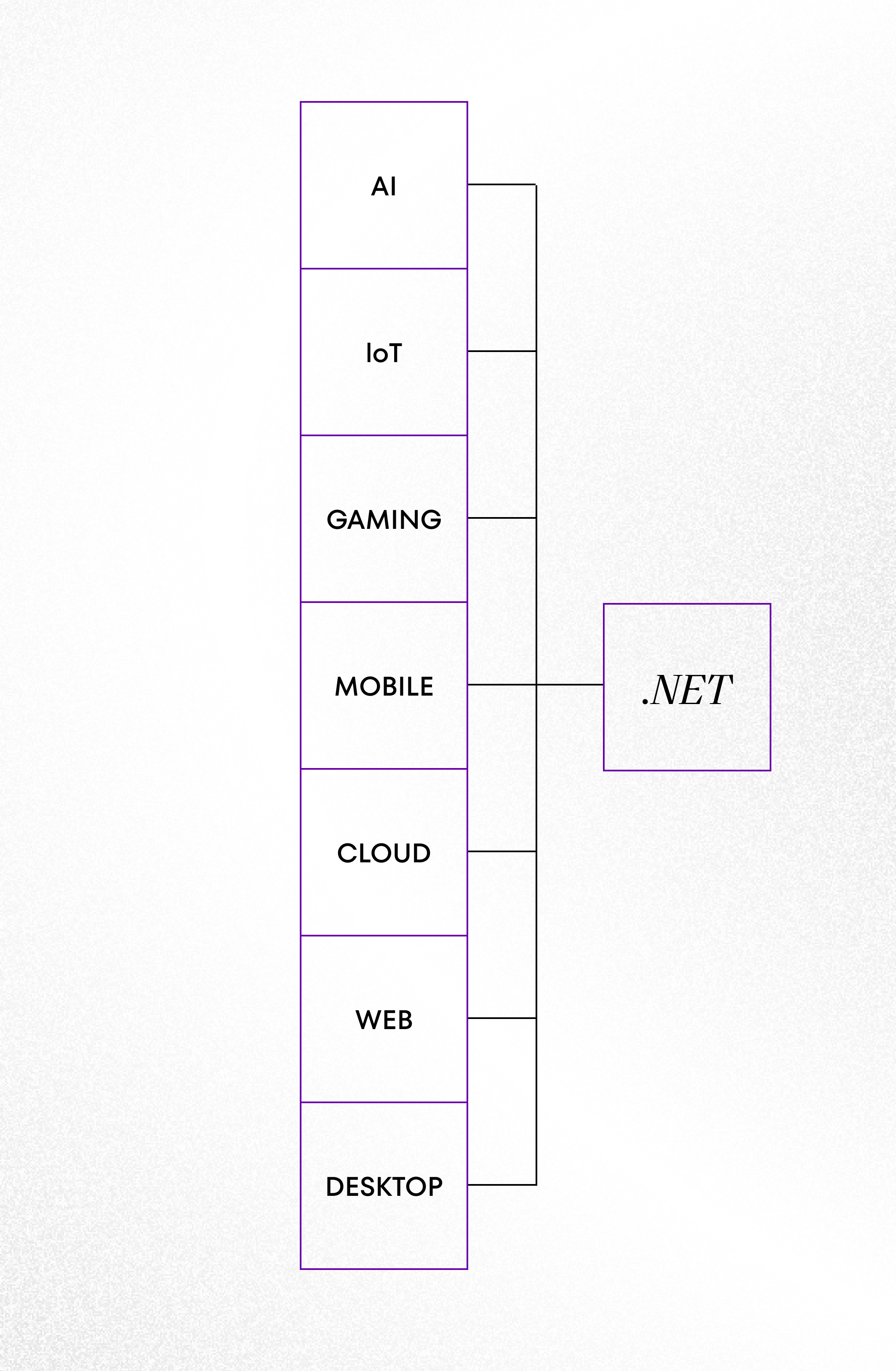

First things first. The conference made it clear which trends are emerging and how .NET, and the developers working with it, are evolving. A handful of topics came up throughout the entire conference, which suggests they are here to stay:

- Containerizing .NET applications with Docker

- Artificial Intelligence (AI), Machine Learning (ML), and Virtual Reality (VR) – voice, text, and image recognition

- System architecture, especially architecture based on services

Opening keynote by Scott Hunter

Let’s start with the opening keynote by Scott Hunter. He focused on the latest technologies that are currently under development or nearly completed. The teams developing .NET are working hard, and Hunter assured us that the number of platform users grows month after month. The technology has been widely adopted by companies and developers and is used in many branches of software development. Last year, over 1 million new active developers joined the .NET community, and the framework itself was installed on millions of new PCs.

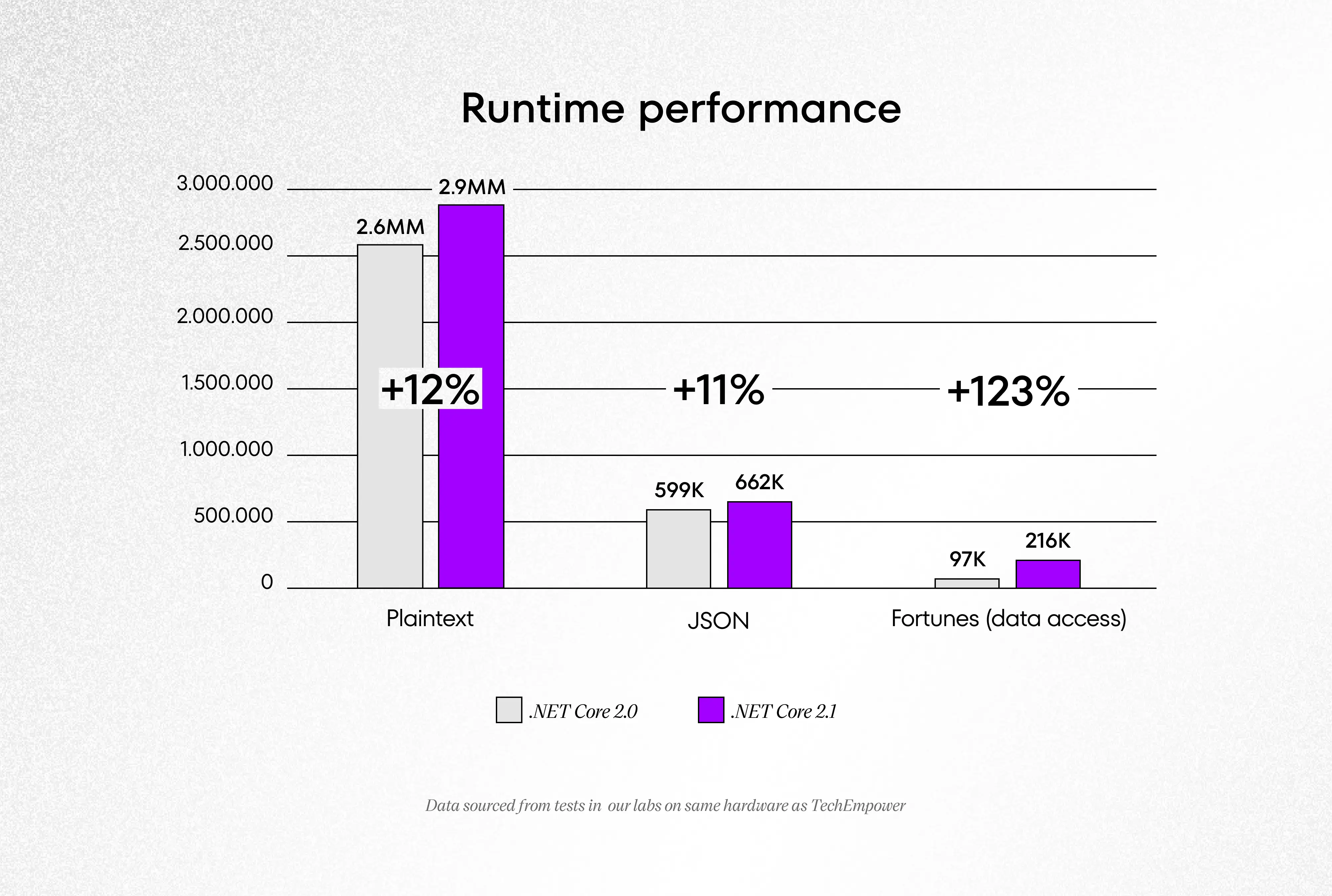

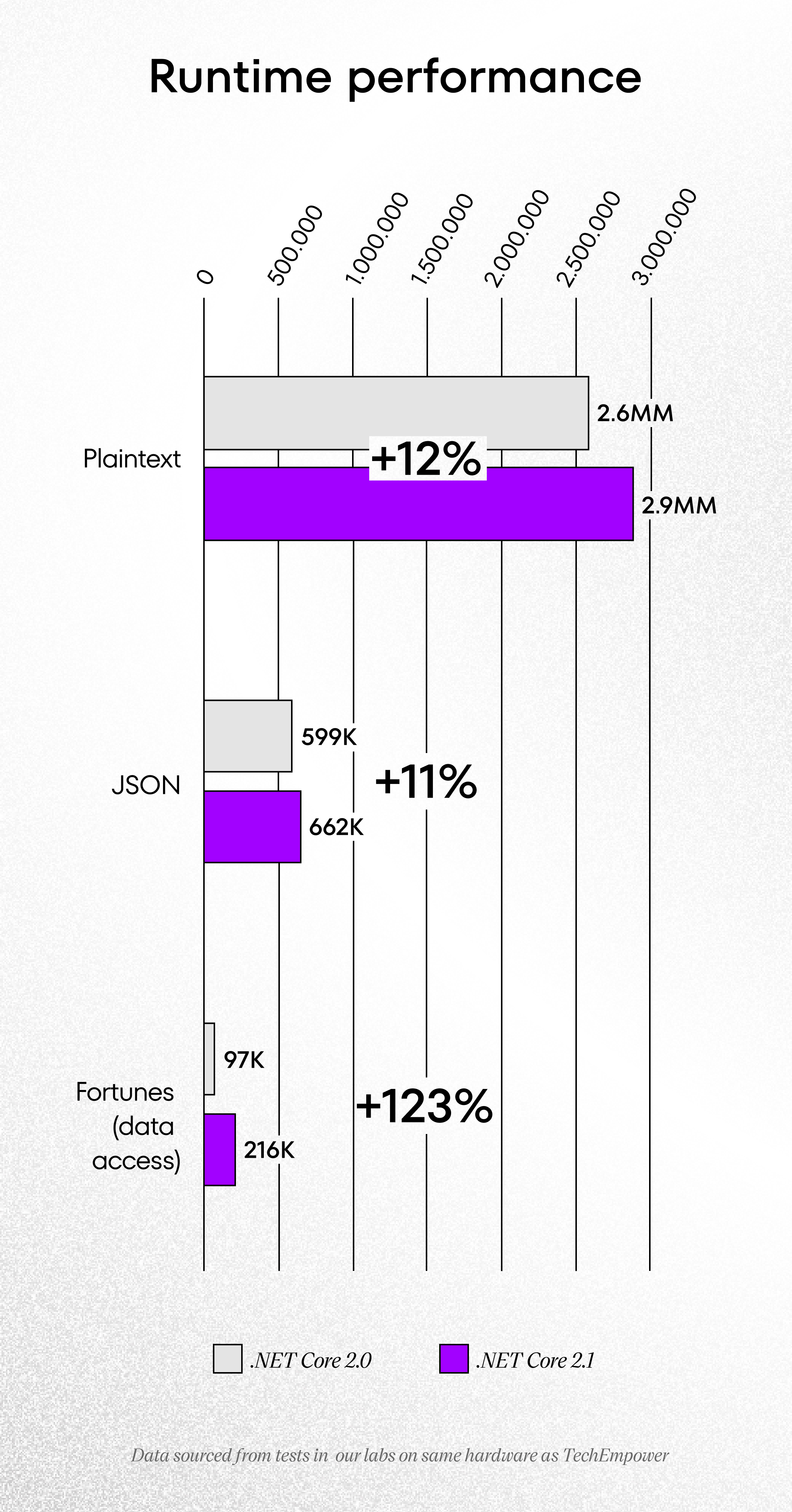

Next, Hunter talked about .NET Core 2.1. New features are coming, along with performance optimizations.

During the keynote, Hunter’s team demonstrated Blazor, a brand new framework for building web client applications with C#, Razor, and HTML that run in the browser with the help of WebAssembly. Blazor should simplify single-page application development, since there will be no need to use JavaScript frameworks. Although Blazor is often described as an ‘experimental’ framework, it clearly shows that Microsoft is aiming for development speed and efficiency. More of that ‘experimental’ mindset, please!

The other demo was Live Share, a tool that lets you share your code with teammates so you can all work on it together. Sounds complicated? It’s not: there is an editor, there is a team, and you work on the same code in real time by sharing an editor session. Just post a comment next to the code, please. Yeah, even when you share the code in real time. Yes, like always. That should be the law. The best part? It doesn’t matter what application you’re working on or what language you’re using to build it, and you don’t all need the same operating system. You use Visual Studio for that, and then edit and debug in real time without cloning a repository or configuring an environment.

Sasha Goldshtein’s keynote

Sasha Goldshtein presented a keynote titled “Squeezing the Hardware to Make Performance Juice.” It was a very advanced and interesting presentation on increasing computational efficiency across the whole application run. When an application is killing the processor by using 100% of its capacity, it can be rescued by running calculations in parallel on multiple threads, which execute at the same time on multiple processor cores. Obvious, right? Goldshtein went further and showed how to write the algorithms used in mathematics, physics, video games, and computer graphics so that they use all of a core’s ‘execution unit’ ports more efficiently. You achieve that through parallelization and vectorization. In plain English, you can write an algorithm in such a way that several mathematical operations run in parallel on a single core. You can also structure decision-making problems so that the processor’s cache is better used for holding intermediate results.
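To make the multi-core part of that idea concrete, here is a minimal Python sketch. It is not taken from the keynote: the function names (`sum_of_squares`, `split_into_chunks`, `parallel_sum_of_squares`) and the workload are made up for illustration. It only shows the shape of the decomposition: split the data, compute partial results independently, then combine them. Keep in mind that CPython threads share an interpreter lock, so this version will not actually run faster; a real speed-up would need a process pool or native code, and the single-core vectorization discussed in the talk happens at the level of CPU instructions, which plain Python cannot express.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """Partial result for one chunk; independent of every other chunk."""
    return sum(x * x for x in chunk)


def split_into_chunks(data, parts):
    """Split data into at most `parts` contiguous chunks of similar size."""
    if not data:
        return []
    size = max(1, (len(data) + parts - 1) // parts)
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_sum_of_squares(data, workers=4):
    """Compute partial sums on a pool of workers, then combine them."""
    chunks = split_into_chunks(data, workers)
    if not chunks:
        return 0
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each chunk is processed by its own task; results come back in order.
        return sum(pool.map(sum_of_squares, chunks))


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10))))
```

The important property is that no chunk depends on another, which is what lets the work spread over cores (or, in native code, over vector lanes) without coordination until the final sum.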

Neal Ford’s keynote

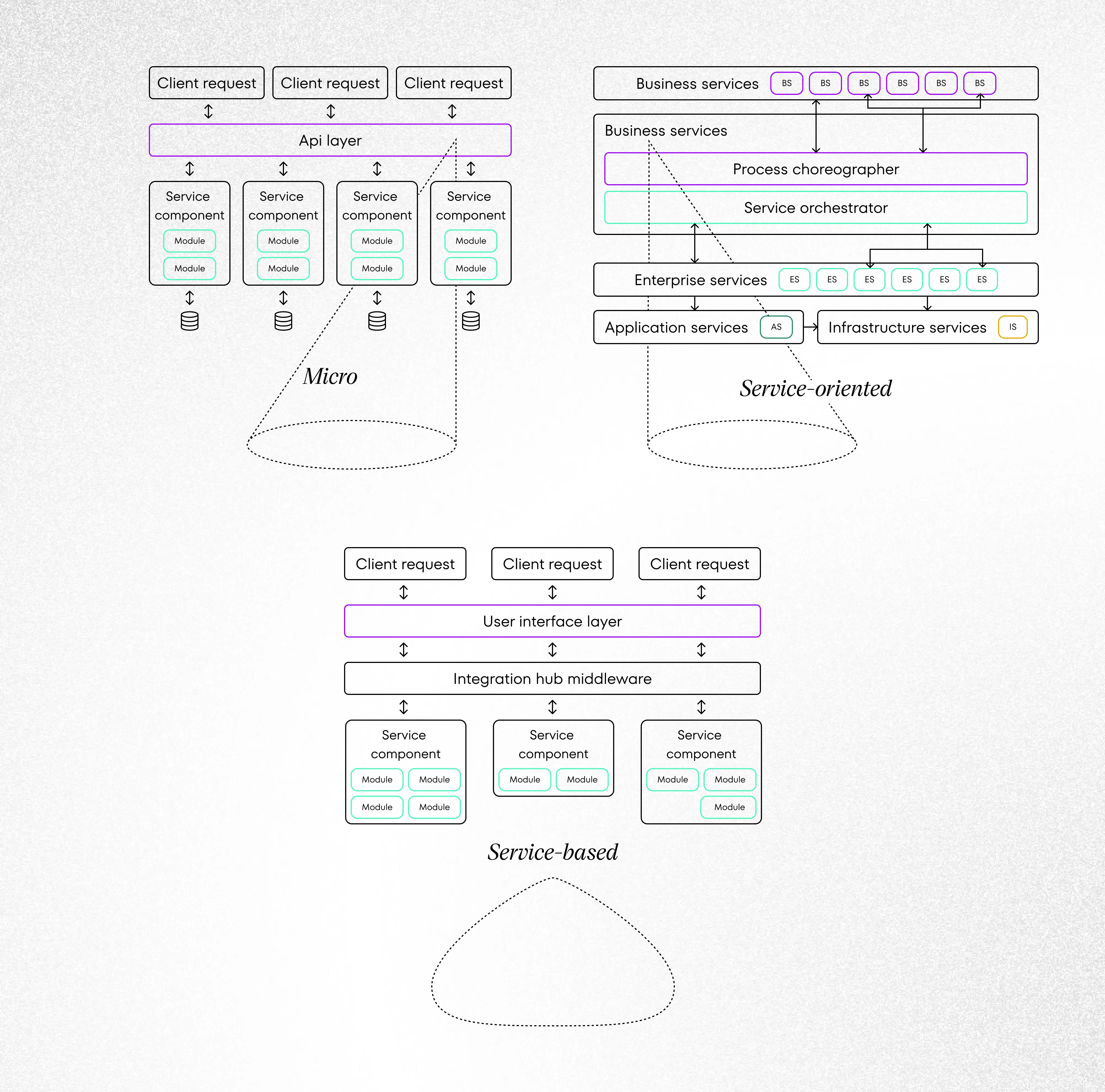

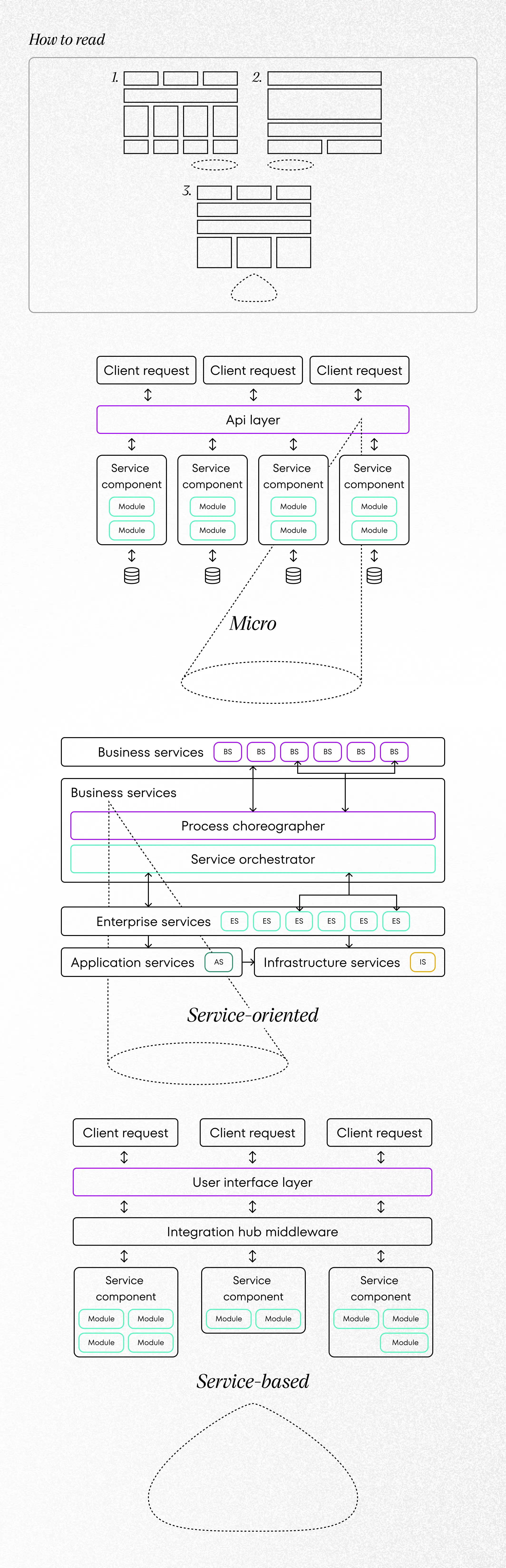

Neal Ford presented a fascinating problem. Titled “Comparing Service-Based Architectures,” the keynote focused on architectures for building applications: monolithic, service-based, service-oriented, and microservices. It broke down the last three. The aim was to work out which of them is the best and which one you should choose. Unfortunately, the right answer is: it depends. Read the whole presentation to learn more about service-based architectures.

Noelle LaCharite’s keynote

Noelle LaCharite presented “Designing Voice-Driven Apps With Alexa, Cortana and Microsoft AI.” This was basically a tech demo for Alexa, Cortana, and Microsoft AI. LaCharite is sure that soon, conversations with artificial intelligence will go way beyond simple voice commands and pre-programmed talks with chatbots. According to her, this branch of software development is still in its infancy and has a low barrier to entry for many developers, so it’s worth paying attention to and perhaps even considering a career change. Watch the sample video about voice-driven apps and software development on her channel to learn more.

Gill Cleeren’s keynote

Gill Cleeren gave a presentation called “Building Enterprise Web Applications With ASP.NET Core MVC.” He talked about features built into the newest version of ASP.NET Core MVC that protect a website against the most common attacks:

- XSS (Cross-Site Scripting). You can protect the website by using Razor and HTML encoding

- CSRF (Cross-Site Request Forgery). For protection, use AntiForgeryToken

These techniques are commonly known, but Cleeren showed how they are implemented and used in the newest framework version.
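The talk was about ASP.NET Core MVC, but both defences are framework-independent, so here is a rough Python sketch of the ideas using only the standard library. It is an illustration, not how ASP.NET Core implements its anti-forgery tokens: the helper names (`render_comment`, `issue_csrf_token`, `is_valid_csrf_token`) and the key and session values are hypothetical.

```python
import hmac
import html
import secrets


def render_comment(user_text: str) -> str:
    """XSS defence: HTML-encode untrusted text before putting it in markup."""
    return f"<p>{html.escape(user_text)}</p>"


def issue_csrf_token(server_key: bytes, session_id: str) -> str:
    """CSRF defence: derive a token bound to the user's session.

    The server embeds this token in every form it renders.
    """
    return hmac.new(server_key, session_id.encode(), "sha256").hexdigest()


def is_valid_csrf_token(server_key: bytes, session_id: str, submitted: str) -> bool:
    """Accept a form post only if it carries the token issued for this session."""
    expected = issue_csrf_token(server_key, session_id)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, submitted)


if __name__ == "__main__":
    key = secrets.token_bytes(32)          # hypothetical server-side secret
    token = issue_csrf_token(key, "session-123")
    print(render_comment("<script>alert(1)</script>"))
    print(is_valid_csrf_token(key, "session-123", token))
```

Encoded output is displayed as text instead of being executed by the browser, and a forged request from another site fails the check because the attacker cannot know the token tied to the victim's session.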

Gill Cleeren continued the topic with “Building A Mobile Enterprise Application With Xamarin.Forms, Docker, MVVM and .NET Core.” In this part, Cleeren analyzed code delivered by Microsoft developers: the eShopOnContainers sample. The goal was to point out the specific places in the app (mostly the mobile part, made with Xamarin) that address potential challenges in developing such a project and are responsible for dependency injection, loose coupling, and the MVVM pattern implementation (with a lot of generic mechanisms).

Kevin Nelson’s keynote

Next is one of the most interesting keynotes! Kevin Nelson talked about Google technologies that help developers build intelligent solutions. The APIs available to developers over REST:

- Cloud Translation

- Cloud Vision, which covers:

  - Label & Web Detection – you can figure out what item is shown in the photo, what brand it is, and, if it’s a unique item, its name and current location on the world map (a demo showed the Ford Anglia from Harry Potter and the Chamber of Secrets)

  - OCR

  - Logo Detection

  - Landmark Detection – the demo recognized the Eiffel Tower even though the picture showed only a fragment of it. Once the picture was processed at better quality, it turned out to be the replica in Las Vegas

  - Crop Hints

  - Explicit Content Detection

- Cloud Natural Language

- Cloud Speech

- Cloud Video Intelligence

- Cloud AutoML. Here Nelson showed a demo with hundreds of photos of clouds. Based on them and on expert knowledge supplied to the AI, Nelson taught it to estimate the probability of rain from the shapes and types of clouds.

Michał Komorowski’s keynote

Michał Komorowski asked the fundamental question with “Will AI Replace Developers?” So will it? The conclusion is simple: not for a very long time. There are projects (e.g., Prophet) that aim to change that, but it’s not that simple. A more probable outcome is a repository for developers that suggests solutions in context, based on the project a developer is working on.

Donovan Brown’s keynote

Donovan Brown and his “Enterprise Transformation” was probably the most motivating keynote of the entire conference. Brown shared his view of what DevOps is, basing his argument on Team Foundation Server (TFS) and the transformation from a mediocre product into a cooperative team at Microsoft that develops a great product, the current version of Visual Studio Team Services. According to Brown, DevOps is ‘the union of people, process, and products to enable continuous delivery of value to our end users.’

The inspiration comes from the surprising methods Brown has implemented to increase productivity, effectiveness, and reporting. Imagine: Brown does sprint planning and sprint review by… email! In the broader perspective, the team does long-term planning with one question in mind: based on the available internal and external knowledge, how should our product look in the next 18 months? They then divide this period into 3 ‘seasons’, each lasting 6 months. Each ends with the Microsoft Build conference. Teams ask themselves, ‘What do we want to achieve by the next Build?’ and start planning. After each season, the planning restarts, meaning you can change the entire vision every 18 months to stay up to date.

You can watch the entire presentation on ‘Enterprise Transformation’ from another meetup here.

Tim Huckaby’s keynote

The last presentation was truly fantastic! Tim Huckaby’s keynote “The Vision of Computer Vision: The Bold Promise of Teaching Computers to See” was based on tech demos of the newest technologies using real-time analysis and image modification.

The first demo touched on augmented reality (AR) viewed through Microsoft HoloLens or a smartphone. Microsoft wants advanced 3D models to appear not just on the screen but in 3D ‘space’ outside of it. The goal is to display them in precise spots and to be able to talk to these avatars in any given language. Does the idea remind you of virtual assistants like Cortana from the Halo video game series or those from the Mass Effect saga?

Microsoft wants to trigger these images in a few ways:

- Code Based – bar code, QR code, etc.

- Spatial – visual mapping of the objects and location, marker-less.

- Geo-Fence Based – GPS Coordinates, marker-less.

- Computer Vision Based – recognition of markers by computer vision.

The next topic had to do with movies. Microsoft was asked to help with Hollywood test screenings that were not objective enough. Random people are frequently asked to watch a movie and say whether it’s interesting and edited well enough. The problem was that these groups weren’t objective enough, plus it was a nightmare to pull any decent information about particular scenes and camera angles. Thanks to Microsoft’s application (one person, one movie, one camera on the face to track interest), it is possible to track emotions and interest levels. Not to mention the eyeballs themselves. All the data is then linked to particular movie frames, building an enormous database.

Next, Huckaby talked about interactive advertisements. Display stands with dedicated software are currently under development. They will show personalized advertisements depending on who is standing in front of them, what’s in their basket, and what mood shows on their face. The content will also change depending on our proximity and the attention we give to the stand.

Last but not least, an overview of real-time object and people recognition. These systems are designed for situations when we are looking for a certain person (for example, someone wanted for crimes or potentially dangerous because they are carrying a gun). There was a demo in which a room full of construction equipment, with a worker in it, was searched for a particular item and the person who took it. A similar presentation on computer vision can be found here.

Last thoughts

The conference was amazing. Very inspirational, full of practical knowledge and real-life situations where hardware and software work together to solve the problems of today’s challenging business and work environment. It was essential for a software product development company like ours. We like to attend this kind of conference to improve our product development services. We are already looking forward to next year’s edition!